Saturday, December 30, 2006

ACSLS Not Really Ready For Library Sharing!

So we have a large ACSLS library at one of our data centers and we decided to use library sharing with 5.3. Tivoli stated that in 5.3 ACSLS is now supported in a library sharing environment. So we set up library sharing and proceeded to also set up DRM to handle our offsite tape rotation. I used IBM equipment almost exclusively when I was with IBM, so this ACSLS stuff is new to me, and when MOVE DRMEDIA did not seem to be working I immediately thought it was an ACSLS issue. The problem was that when we ran a MOVE DRMEDIA the library would only check out one tape at a time. We have two 40-tape I/O doors, so why it would only check out one tape at a time was puzzling (it would wait until the tape was removed from the I/O door before checking out the next tape). So I called Sun/STK and was told it was a software issue. When I called Tivoli it took days before someone found the issue was in fact TSM. The APAR is IC45537, which states that TSM library clients do not currently support the concurrent checkout of multiple volumes. There was a local workaround, which entailed using MOVE DRMEDIA REMOVE=NO from the library client, checking the tapes back in on the library controller, and then checking them out on the library controller. It's a mess of a script but it works. This APAR was released in April of 2005, yet I am still having to use a local workaround. It just drives me nuts! Especially because the problem was not one that even Tivoli support easily found. Let's hope there is an actual fix in 5.4, but I'm not betting on it.
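
The workaround is easier to follow as a sequence of commands. Here is a rough Python sketch that only builds the command strings for each tape; it does not talk to TSM at all. The library name, the volume names, and the exact option values (WHERESTATE, TOSTATE, STATUS, CHECKLABEL, REMOVE=BULK) are assumptions for illustration, so check them against the Administrator's Reference for your server level before using anything like this.

```python
def workaround_commands(library, volumes):
    """Build the three-step command sequence for a list of offsite volumes.

    Returns a list of (where_to_run, command) tuples. Nothing is executed.
    All option values below are illustrative assumptions.
    """
    steps = []
    for vol in volumes:
        # Step 1 (library client): update DRM without ejecting the tape.
        steps.append((
            "client",
            f"MOVE DRMEDIA {vol} WHERESTATE=MOUNTABLE TOSTATE=VAULT REMOVE=NO",
        ))
    for vol in volumes:
        # Step 2 (library controller): check the tape back in.
        steps.append((
            "controller",
            f"CHECKIN LIBVOLUME {library} {vol} STATUS=PRIVATE CHECKLABEL=NO",
        ))
    for vol in volumes:
        # Step 3 (library controller): check the tape out to the I/O door.
        steps.append((
            "controller",
            f"CHECKOUT LIBVOLUME {library} {vol} REMOVE=BULK CHECKLABEL=NO",
        ))
    return steps


if __name__ == "__main__":
    # "ACSLIB" and the volume labels are made-up names.
    for where, command in workaround_commands("ACSLIB", ["A00001", "A00002"]):
        print(f"[{where}] {command}")
```

Grouping the steps by phase (all moves, then all check-ins, then all checkouts) means every checkout request is issued from the controller, which is the side that can handle more than one at a time.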

Tuesday, December 19, 2006

MOVE DRMEDIA from shared ACSLS library

OK, so my work has an ACSLS library shared by 7 instances of TSM and my MOVE DRMEDIA commands fail. I have tried it with and without the cap= option and have also used remove=bulk and remove=yes. The volumes fail to check out, and the ACSLS documentation I have found so far does not cover shared ACSLS checkout. Any help is appreciated.

Monday, November 27, 2006

GPFS Revisited

Well, I am still having issues with GPFS. It turned out that mmbackup won't work with the filesystem size either, and a chat with IBM support was not encouraging. Here is what one of our System Admins found out:

The problem was eventually resolved by IBM GPFS developers. It turns out, they never thought their filesystem would be used in this configuration (i.e. 100,000,000 + inodes on a 200GB filesystem). During the time the filesystem was down, we tried multiple times to copy the data off to a different disk. Due to the sheer number of files on the filesystem, every attempt failed. For instance, I found the following commands would have taken weeks to complete:

# cd $src;find . -depth -print | cpio -pamd $dest

# cd $src; tar cf - . | (cd $dest; tar xf -)

Even with the snapshot, I don't think TSM is going to be able to solve this one. This will probably need to be done at the EMC level, where a bit-level copy can be made.

So GPFS is not all it was thought to be. Pass it along, and make sure you avoid GPFS for applications that will produce large numbers of files.
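
To put rough numbers on why those copies would have taken weeks, here is a quick back-of-the-envelope sketch in Python. Only the inode count and the filesystem size come from the description above; the files-per-second rates are made-up assumptions, not measurements from our system.

```python
GB = 10**9  # decimal gigabyte; an assumption about how "200GB" was measured


def average_bytes_per_inode(filesystem_bytes, inode_count):
    """Average space available per inode if the filesystem were full."""
    return filesystem_bytes / inode_count


def days_to_copy(file_count, files_per_second):
    """Days needed to walk and copy file_count files at a steady rate."""
    return file_count / files_per_second / 86400


if __name__ == "__main__":
    inodes = 100_000_000
    avg = average_bytes_per_inode(200 * GB, inodes)
    print(f"average size per inode: {avg:.0f} bytes")
    for rate in (50, 100, 500):  # hypothetical files/second
        print(f"{rate:>4} files/s -> {days_to_copy(inodes, rate):.1f} days")
```

The point is that the per-file overhead, not the amount of data, sets the copy time when the average file is only a couple of kilobytes.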

Monday, November 06, 2006

Looking For Contributors

If you work in a large TSM environment and have experienced issues or tasks that you think passing along would help others please let me know. I am looking for contributors to keep this blog current and make it more open. Send me an e-mail with contribution ideas (you'll need more than 1) and what type of environment you work in.

CDP For Unix?

Has anyone heard when (if ever) Continuous Data Protection for Files will be available on the Unix platform? I could really use this feature with my GPFS system. The application creates hundreds of metadata files daily and is proprietary (hence no TDP support), so I am getting killed by the backup timeframe: each volume has in excess of 4 million files already and incrementals take close to 48 hours to finish. Anyone heard anything at symposiums or seminars?

Tuesday, October 17, 2006

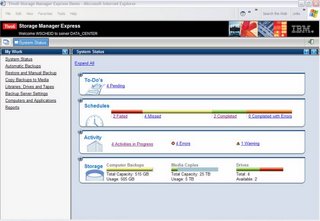

A Sit Down With ISC Admin Developers

The wonderful people with GUI/Web development at IBM were kind enough to have a conference call with me to discuss issues with the TSM admin interface. They have read my posts and thought I might have a good idea as to why so many people dislike the interface. The truth is, a central console to manage your TSM servers is a better paradigm than a web server per TSM instance process. What was agreed across the board is that the problem is WebSphere. They made it sound like they are trying to move to a TSM Express model; TSM Express uses a WebSphere Lite and is a lot more responsive. The current WebSphere is just too big and slow for the needs of TSM admins.

The other problem with the ISC that is very apparent is the inability to view selected content in the viewable area. When you select certain commands, the results often do not appear on the visible screen but below it, requiring scrolling. Let's face it, not everyone managing TSM is an expert, so they might miss the results and think something is wrong with the interface. The good (and some consider bad) of the old interface was that the result of a selection or command was instantly seen in the results frame. It wasn't perfect but it was more functional than the current model. The other issue, which I believe was agreed upon, was the resolution used for the screens. The layout needs to either be fluid like this blog (meaning it resizes itself for various screen resolutions without going beyond the borders of the browser) or be set to a specific resolution. The recommended resolution for the web to meet 99% of the users out there is 800x600. I know many of you might think that 800x600 is low, but it can be shown in almost every user’s browser. The ISC, however, seems built for a resolution of 1280x1024. This doesn't work because many people can't go beyond 1024x768 (like me with my laptop). With items off the screen it is harder to use the interface, since things like the collapsible section button (minimize) are off the screen. I also suggested that the Libraries for All Servers section be a separate tab that changes color if one of the libraries is having issues. It gets in the way having it there at all times. Sure you can minimize it, but if you select another function it resets itself. I would also like to see the TSM servers easily definable. One of the issues I had is that every admin has to define their own servers to the ISC. So the same server ends up being defined multiple times when a single definition would do. If you can’t log in, who cares if you can see it?
I also think they need to move the servers to an expandable list along the side frame so that you can select the server you want to work with and the context frame switches to that server's content.

Another example of how they made selection more complex is working with client scheduling. Tivoli took away the scheduling link and changed it so that to access client schedules you first select domains, then select the server you want to work with, then choose the domain where you think the schedule is. Below the domain list you will see multiple options, one of which is Client Node Schedules; select that and then you can choose the schedules you want to work with. That took 5 selections to get to the schedule you need. And the schedules are under the domain, so you don’t see a full list of schedules like before. So if you choose the wrong domain…start the process over. I also think they need to add a color-coded error window like TSM Manager has. It is so helpful it's amazing!

I would like to thank the IBM people who listened to my suggestions. I find it refreshing to see IBM working hard to fix issues to make the user's experience more productive. I will admit I really want a central management console (that's why I love TSM Manager) and a streamlined, fast, and simple ISC will definitely win me over. Especially now since my new workplace doesn't have TSM Manager! :(

Note: Please leave your own feedback in the comments section so that the TSM ISC Admin Development team can hear what you have to say. I know they would like feedback from more than me.

Monday, October 09, 2006

Unsupported Debian Client Available

Thanks to Harry Redl for the Debian client he has put together. It took him some work but now you can have your Ubuntu and back it up too. According to Harry at adsm.org the package works on Debian Woody, Debian Sarge and Ubuntu 6.06. As Harry stated, the package is not supported by IBM and USE AT YOUR OWN RISK! All disclaimers and such apply with this package. No claim is made to its reliability blah blah blah! You hopefully get the idea. Anyway, Harry uses it in his 99% Debian shop without a problem...which brings up the following question. Harry, what OS does your TSM server run on?

Sunday, October 08, 2006

TSMManager 4.1

Back in June of 2005 I posted a review of TSMManager 4.0. When I was with IBM I had convinced management to purchase the product and we got a sweetheart deal to boot. I was initially drawn to the nice admin console window. As you'll see in the picture below, when you open an admin console you are presented with a tabbed window that has a number of different views, one per tab. The first tab is the ALL MESSAGES tab, which is basically an active view of the TSM server's activity log. The second tab is the most useful and important tab within TSMManager; in fact it is what makes TSMManager my favorite tool to use. This tab is the ERROR MESSAGES tab and it basically filters the TSM activity log to show only WARNING, ERROR, and SEVERE ERROR messages. Why is this the most important piece of TSMManager? Because with this one tab, 95+% of the time I can deduce a problem within a minute or two. For example, in the provided screen shot you can see we had a number of drive errors, but look closer and you see it's one tape causing the problem. This took me a minute to figure out and I easily resolved it by removing the tape. The next tab I rarely use but it can come in handy. It is the SPECIAL MESSAGES tab and it covers ALL the servers, not just the one you have selected to manage at the moment. It really lists the processes and their start and end times. You might use the tab to look up processes and how they ran, but I haven't found a real use for this tab yet. The next tab is the actual TSM Admin command line. You can issue commands or use the quick commands provided on the side. TSMManager will store the last 20+ commands in a buffer for recall. Overall this window and its tabs are worth the cost of the product for the productivity enhancement.

This is the error tab. Click on it and you'll see how it consolidates all errors into a simple interface. Great for those times you're having server issues.
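
If you don't have TSMManager, the idea behind the ERROR MESSAGES tab can be roughed out in a few lines. This is only a sketch of the filtering idea, not how TSMManager does it: it assumes activity log lines carry server message IDs of the form ANRnnnnX, where the trailing letter is the severity (I, W, E, S, or D), and it keeps only W, E, and S. The informational sample line is made up; the two error lines are real ones from one of our servers.

```python
import re

# Server message ID: "ANR", four digits, then a one-letter severity code.
MSG_ID = re.compile(r"\bANR\d{4}([IWESD])\b")

# Warning, Error, Severe: the severities the error tab shows.
PROBLEM_SEVERITIES = {"W", "E", "S"}


def error_messages(log_lines):
    """Return only the lines whose message ID has a W, E, or S severity."""
    kept = []
    for line in log_lines:
        match = MSG_ID.search(line)
        if match and match.group(1) in PROBLEM_SEVERITIES:
            kept.append(line)
    return kept


SAMPLE = [
    "ANR0000I Example informational message (made up for this sketch).",
    "ANR8779E Unable to open drive /dev/rmt25, error number=16.",
    "ANR8311E An I/O error occurred while accessing drive DR25 "
    "(/dev/rmt25) for SETMODE operation, errno = 9.",
]

if __name__ == "__main__":
    for line in error_messages(SAMPLE):
        print(line)
```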

Thursday, October 05, 2006

ANR9999D Error Restoring From Backupsets

We have a site that uses backupsets for extended retention (which I hate, but that's beside the point). I had a number of restores (and "QUERY BACKUPSETCONTENTS" commands) that were failing with the error "Command failed – internal server error detected." Further research into the problem revealed only that 9999D means "thread terminated", with no other specific information.

After a call to Tivoli support, and some exchanging of logs, it was determined that the tape containing the backupset was not in the library. When the "request" timer expired, the server would terminate the thread, but no "message" would be returned. This happens when running commands from both the server and client command line interfaces. You can replicate the error by running the command in one window, then canceling the request on the server in another. The Tivoli rep said that he would send it to the developers, so this may be "fixed" to include a proper error message in a future version.

Wednesday, October 04, 2006

New Look

Well, as you can see I went with a new plain 3-column look in hopes of making TSMExpert easier to use. If you have any suggestions for the site let me know; I will definitely take them into consideration.

EMC & NDMP

Does anyone know the answer to this question? I have an EMC Clariion and it looks like the easiest way to back up some problem file systems is by NDMP. Does EMC Clariion support NDMP? Is there any specific configuration that does or do they all support it natively? Does it need a Celerra NAS head? Any information from EMC-knowledgeable people is appreciated.

Monday, September 25, 2006

Why Use GPFS?

So we have GPFS-enabled systems and an application that uses these file systems to store MILLIONS of very small files. The GPFS file system is supposed to allow multiple systems to share a filespace and data, but we continually have issues with backups. A lot of it is due to the number of files (9+ million in one file system alone), but also to the memory utilization of the scheduler. The system has only 4 GB of memory and when the scheduler is running it consumes at least 1 GB. So my question is, wouldn't a NAS-based file system work just as well? Granted the GPFS file systems look local on each server, and in all respects are local, but the difference can't be that big, especially when the majority of the data is under 10K in size (mostly under 1K to be exact). So does anyone have experience with GPFS to state otherwise?

Thursday, August 31, 2006

How Tivoli Can Fix The ISC

I thought about it and instead of just complaining I figured I'd offer my two cents. The beauty of the old web interface was that it was simple and fast at refreshing (usually). The new ISC is slow in this regard, and that is where the problem lies. The idea of a central monitoring tool is wonderful. The problem is in the response time. OK! So how can they fix it? AJAX! Yes, with Asynchronous JavaScript And XML there would be no reloads. Updates would happen dynamically and the ISC Admin Center would actually be amazing! Don't believe me? Look at what a lot of the Web 2.0 apps are using, that's right, AJAX! It turns a ho-hum web app into a true desktop app that you would forget is running in your browser. Is it in the works? My bets are on NO! But, hey, someone has to have dreams!

Wednesday, August 30, 2006

Search TSMExpert

OK! So I have covered a lot of topics, and the way blogs work does not easily lend itself to finding the info you might need. I would agree with you, except that Blogger is hosted by Google, which means you can find anything you need if you search for it! Use the Google search box on the right and select TSMExpert.blogspot.com. Google then searches through this website to find the topics that might best suit your needs. If you need NDMP info or have questions on the LVSA, the areas where they have been covered in this blog can easily be found.

Tuesday, August 29, 2006

TSM AIX Performance Issue With ML05

I found this APAR interesting and thought I would pass it along. There seems to be a bug in the TSM client for AIX when updated to ML05 and Direct I/O is in use on the filesystems. I can't explain the details well enough so read the post here. This bug can cause significant increases in backup time. They give a good example in the APAR description.

Monday, August 28, 2006

Disk Question

Ok so in the DS4000 Redbook (page 153) they give a TSM example for setting up disk and they describe using a RAID 10 configuration. What!?! I've always heard and taught that TSM should use TSM mirroring since the extra DB copies are used for writing to help with performance and TSM keeps them all sync'd. So why would I create two RAID 10 arrays and let the DS4000 handle the mirroring? Why not create two RAID 1 arrays and mirror thru TSM? This has implications not only on the DS4000 series but on every high-end enterprise disk out there. So which is it IBM?

TSM Admin Center Blues!

OK so I used TSMManager when I was with IBM and at the new account, I must say, I see the headaches involved when you don't have a central management tool. I am trying to configure my servers on the ISC Admin Center and it is a piece-o-crap! I don't think anyone at Tivoli or IBM did a usability study. If they had, they would not have moved to the new interface, or they would have made it a lot better. Granted the ISC gives you a central management tool, but it is so unintuitive and slow I find myself grinding my teeth using it. Now we are almost two years into the ISC and it still sucks, so what gives? Anyone have anything positive or hints to make it somewhat usable? It's slow, clunky, and not intuitive. Use the Operational Reporting tool you say? Forget it! It doesn’t even look like they are moving forward with it on 5.3 since it still has a web option. I just have one question for the developers: what is going on?

Tuesday, August 22, 2006

Any Advice On ACSLS?

So I have a new job with a new company doing TSM work and they have an STK library using ACSLS. Since I have never used ACSLS and am not sure why it doesn’t have a web interface, I am looking for pointers from you all as to tips, tricks, or things I should know when using it. One question I have is why did they make a management tool that has to run on a totally separate piece of hardware? STK has stuck with ACSLS for a long time so I am assuming it must be somewhat worthwhile. Is it?

Thursday, August 10, 2006

Intro and VSS information

Hello to all you readers of TSMEXPERT out there. My name is Jared and I've been asked to join by my good friend Chad (who is leaving the job he helped me get!) and post some of the Windows stuff that I've been doing with TSM lately. I put together a PPT presentation that talks about VSS and TSM. It might not be that helpful but it was useful to our team here. I have spent many hours trying to fix various TSM and VSS issues over the past couple of years and this is a result of that work. I will warn you that some of this is "editorialized" and those parts should be rather obvious.

Without further ado, click here to download it.

Leaving IBM

Friday will be my last day with IBM. It’s been a great 5 years, but I realized that the only way my career would grow was to make a change. I have learned a lot and am thankful for the opportunity IBM provided. My new employer will be Infocrossing, and I look forward to the challenges that I will encounter. For one it won’t be a majority IBM hardware user so I will be able to get my hands dirty with ACSLS and STK libraries. I love IBM equipment so it will be interesting to see what it’s like in the non-IBM world. I will still be doing TSM so this blog is not going anywhere.

Friday, August 04, 2006

TSM V5.4 Enhancements

TSM V5.4 Enhancements Selected for Beta Account Validation

Availability:

Cluster Failover for TSM HSM for Windows (available October, 2006)

Capability:

TSM Express to TSM Enterprise upgrade

Backupset Enhancements

- Generate to a specified point-in-time

- Improve tracking,

- Stack multiple nodes' data on a single set of volumes,

- Enable the client to display contents and allow selection of individual files for restore,

- Support image data,

- And more

Compatibility:

Support for Windows Vista (available November, 2006)

Support for Intel based Macintosh systems

Interoperability:

Using TSM Server as an NDMP Tape Server (Filer to Server to backup)

Create Media for offsite vaulting of NDMP Generated Data

Performance:

Reconciliation for TSM HSM for Windows (available October, 2006)

Scalability:

Improved BA client memory usage

- Note: Using the disk cache setting will require additional disk space. The disk cache file can exceed 4 gigabytes for a very large filesystem incremental backup. Therefore, if the filesystem does not support large files, the user will need to enable large file support for the filesystem or use the diskcachelocation setting to point to a filesystem that can support large files.

- Enhancement provides a new type of storage pool for storing only active versions of backup client data.

Administrator Center Enhancements Part 3

- Update administrator password, cmd line applet, AUDIT LIBRARY/VOLUME, QUERY NODEDATA/MEDIA, MOVE NODEDATA/MEDIA

Tuesday, July 18, 2006

Have You Checked Your Drive Firmware Lately?

Well, the weekend is over and I thought I would update you on the problem SAP system. In the last update we had discussed how this one TSM server was consistently having its mounts go into a RESERVED state and hang. The only thing we could do was cycle the TSM server. After talking to support, they stated it was a known problem listed in APAR IC49066 and fixed in the TSM server 5.3.3.2 release. So we upgraded the TSM server, updated ATAPE on the problem server to the same level as on the controller, and turned on SANDISCOVERY. The system came back up and started to mount tapes, albeit slowly. So we let it run and all seemed well for about the first 8 hours, then all hell broke loose. TSM started taking longer and longer to mount tapes until it couldn’t mount anything. We also began receiving the following errors:

7/13/2006 9:38:45 AM ANR8779E Unable to open drive /dev/rmt25, error number=16.

7/13/2006 9:38:45 AM ANR8311E An I/O error occurred while accessing drive DR25 (/dev/rmt25) for SETMODE operation, errno = 9.

We reopened the problem ticket and talked to a number of Tivoli support reps and almost had to force them to have us run a trace. The original problem fix began Friday and here we were still trying to fix it well into early Sunday morning. After getting the trace, Tivoli looked it over and seemed perplexed at the errors. It looked as if TSM was trying to mount tapes in drives that the library client was not pathed for. It also seemed TSM was polling the library for drives but was unable to get a response, so it went into a polling loop until it found a drive. This caused our mount queue to get as high as 100+ tape mounts waiting, and the mounts that did complete sometimes took 30-45 minutes to do so. It was NUTS! So Sunday morning a new Tivoli rep was assigned (John Wang). As we discussed the problem, and while Tivoli was trying to get a developer to analyze the trace, John mentioned that he had seen this type of error before in a call about an LTO-1 library. He stated that it took 3 weeks to determine the problem but that the end result was that the firmware on the drives was down-level and causing the mount issues. So I checked my 3584’s web interface (I feel bad for all those people out there without web interfaces on their libraries) and found the drive firmware level at 57F7. This seemed down-level from what little information we had, so I had my on-call person call 1-800-IBM-SERV and place a SEV-1 service call. The CE called me and we discussed the firmware level. When he saw how down-level it was he was surprised and lectured me on making sure we always check with CEs before we make any changes to the environment. The CE then gathered his needed software and came to the account to update the drives. I brought all the TSM servers down and after 30 minutes the library had dismounted all tapes from the drives. The CE then proceeded to update the firmware, which actually only took 15-20 minutes. I expected longer.
So we went from firmware level 57F7 to 64D0. Huge jump! After the firmware upgrade I audited the library and brought the controller back up. Voilà! It started mounting tapes as soon as the library initialized and the response was back to what it should have been. It’s now Tuesday morning and there have been no problems. So before you upgrade TSM be sure to check your library's firmware (both library and drives). It could mean the difference between sink and swim!
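
When you are staring at messages like ANR8779E and ANR8311E, it can help to translate the trailing error number into a name. The following is a minimal sketch, assuming the number TSM reports is the operating system's errno value; it looks the name up on whatever machine runs the script, so ideally run it on the TSM server host itself. The regular expression and the function name are just for illustration.

```python
import errno
import os
import re

# Matches both spellings seen in the messages: "error number=16" and "errno = 9".
ERRNO_PATTERN = re.compile(r"(?:error number|errno)\s*=\s*(\d+)")


def decode_errno(message):
    """Return (number, symbolic name, description) or None if no errno is found."""
    match = ERRNO_PATTERN.search(message)
    if match is None:
        return None
    number = int(match.group(1))
    name = errno.errorcode.get(number, "UNKNOWN")
    return number, name, os.strerror(number)


if __name__ == "__main__":
    messages = [
        "ANR8779E Unable to open drive /dev/rmt25, error number=16.",
        "ANR8311E An I/O error occurred while accessing drive DR25 "
        "(/dev/rmt25) for SETMODE operation, errno = 9.",
    ]
    for msg in messages:
        print(msg.split()[0], decode_errno(msg))
```

A "busy" error on the open followed by a "bad file descriptor" on the next operation would at least be consistent with a drive that never opened cleanly, though that is a guess and not something support confirmed.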

Saturday, July 15, 2006

Shared Library Problem Solved?

Well, after trying numerous things to get the problematic shared library working I finally got support on the line to fix this problem. The other day the problem reoccurred after about a week. I thought I had fixed the problem by changing the IP/VLAN the servers communicated over, and I thought it was fixed until it all came crashing down. So I upgraded the problem instance to the same release level as the controller (the controller has to be at the same level as, or higher than, the client servers). That didn’t fix it! I turned RESETDRIVES to NO and that didn’t do it either (thanks for the advice, Scott). So we got Tivoli on the line and Andy Ruhl with Tivoli support worked with us to identify the problem. The first thing we did was check the ATAPE level, and it turned out the controller was at a higher version, so we updated the client to the same level as the controller. Then Andy found APAR IC49066, which interestingly enough describes my problem. Turns out this behavior was a known issue, and although the fix was due in 5.3.4 it was added to the 5.3.3.2 update. The APAR text states that it occurs when the client is accessing two different controllers, but Andy stated that was a misprint and it applies to single controller instances as well. So we updated all 5 TSM servers to the fixed level and hope we don’t experience any more issues. So far so good!

Sunday, July 09, 2006

Help! Problem With Shared Library Client!

Ok, here is my problem. I have a large shared library that supports 5 TSM servers. All of the TSM servers, save one, run without any problems. The one with problems is pathed to 32 of the 5 drives (they are designated just for its use) and periodically its mount requests go into a RESERVED state but never mount. I have tried FORCESYNC'ing both sides to see if it was communication but that didn't work. I have tried setting paths offline then back online and that didn't work either. The only thing that works is to cycle the TSM server then it comes back up and starts mounting tapes like nothing was wrong. This happens on average every 24-48 hours. This is a large DB client TSM instance so the reboots are hurting critical business apps backups. I don't see any glaring errors in the activity log or in the AIX errpt. Any suggestions would be gladly received.

Wednesday, July 05, 2006

Import/Export Question!

Can someone explain to me why moving data (exporting/importing) from one TSM server to another TSM server is such a pain in the neck? Here is my scenario, I have some servers that moved from one location to another, network wise, and they now need to backup to a different TSM server. Both servers use the same media type (LTO-3). So why is it I have to either copy all the data across the network to the new server as it creates new tapes, or dump it all to tape(s) and then rewrite it to new tape(s) when imported? My question is this - why can’t I just export the DB info and pointers for the already existing tape to the new TSM server from the old? Why can’t the old server “hand-over” the tape to the new TSM server? It seems like a lot of wasted work to constantly have to copy the data (server to server export/import) or dump it to tape and then write the data to new tapes. I think the developers ought to work on a way of doing this. I would also think that this process could be done on a DB level so you could in a sense reorg the DB without the long DB Dump/Load and audit process, and the tapes would be handed over to the new TSM server. No rewrites necessary.

Thursday, June 22, 2006

The Linux Big But!

“I love Linux, I support Linux, I would love to use Linux but….” You won’t hear this just from me. The problem is a frustrating one. I for one have a number of Linux TSM servers and they have become somewhat of a problem. For example, we had a situation where we decided to deploy a Linux TSM server and proceeded to set up the site. Problems arose when we attempted to connect the library. As it turned out the version of SUSE was down-level and the driver would not work. So we upgraded Linux and the library worked fine, but (there it is again) the RAID controller for the server would no longer work and there was no updated driver for it. This has been and continues to be the problem with Linux. Sure, companies beat their chests and yell, “We support Linux!” The problem is that it’s limited support, and we the users who implement get left with what turns out to be a patchwork solution. I’m sure many will say, “But I have Linux working and it works fine.” Great! The problem is in the choices available to you hardware-wise when architecting the solution. I can be pretty sure that I won’t run into too many problems when solutioning for AIX, HP/UX, Solaris, or Windows. With Linux you have to do twice the homework and hope the hardware company keeps its drivers up to date. Just too risky for the data. Don’t get me wrong, it has gotten better, but there is a long way to go before I would commit to using Linux again.

Sunday, June 18, 2006

Unofficial TSM Clients for Linux - Debian

Hi all,

From time to time I see questions on various forums about a TSM client for Debian. IBM itself does not consider Debian a supported platform, so they do not even plan to create the package. You can find several HOWTOs (mostly created using the "alien" package converter) on the web, or you can install the rpm and try to solve the dependencies on your own.

As our environment is 95%+ Debian based, we created our own .deb package(s). We unpacked the rpm's (both API and BA), created new postinst scripts, sample configs etc. and packed it up in a single package.

Originally we intended to use them for internal purposes only, but we think someone might find them useful.

You can find the packages on http://www.adsm.org website - download section

Rules:

1) Package is not an official IBM release, Debian is not supported - use at your own risk

2) read the README ... really .....

3) if you have comment/suggestion/improvement - let me know

Harry

Wednesday, June 14, 2006

System State & Services Backup Issue

I had a problem recently with McAfee and TSM not playing nice together. Jared Annes (co-worker) figured out the problem and this is his finding. What happened was that changes to the default scanning and file protection procedures were made to McAfee to “lock down” the servers from viruses. Unfortunately the SA’s inadvertently caused TSM to fail every system state and system services backup. The culprit was one file, TFTP.EXE in the System32 folder due to McAfee locking the file from being read. What I didn’t expect was that a failure of one file would cause TSM to fail on the whole system state and system services backup. I forgot the system state and services are looked at as one object instead of multiple files. The fix was to disable McAfee, then delete TFTP.EXE from the hidden dllcache folder, also from the System32 folder, and then re-enable McAfee. Since then I have had no more troubles with the system state and services backups.

NOTE: If you don’t delete the file from the dllcache folder Windows will copy it back over to the System32 folder recreating the problem you were trying to fix.

Wednesday, May 31, 2006

All Hail The Java GUI!

Interesting resolution to a restore I just had thought I would pass it on... On one of our Win2K3 servers all admin accounts were crippled due to some corruption in the registry (my vote was on Gremlins!). So they wanted to restore the system state to a week ago. I tried and the client core dumped due to our permissions being crippled. So I tried one other thing and that is the Java GUI. I figured since it runs under the System Account it might work. Well it did and fixed their problems....I had not used the Java GUI in ages but here is one situation where it came in quite handy.

Tuesday, May 23, 2006

LAN-Free In A Shared Library Environment

I had to setup a LAN-Free client recently and ran into some trouble with the tape environment. As you all know I use a shared library environment where multiple TSM servers share a single large library through a library controller TSM instance. This works great since it allows for a shared scratch pool and TSM can be bounced faster since the DB is less than 2GB. The problem arises when trying to setup a LAN-Free agent in this complex environment. If you have ever read the directions for LAN-Free you’ll notice they can be quite confusing since they switch the client port to 1502 to accommodate the LAN-Free agent’s default of 1500. Now understand 1500 makes sense because the LAN-Free agent is really just a stripped down TSM server. Since the TSM server uses 1500 by default so does the agent. I decided to use port 1502 for the agent but forgot to change it in the dsmsta.opt file. This kept me from being able to connect to the agent from the client since it was looking at port 1502 and the agent was still using port 1500.

Another issue the directions don’t fully explain is where to point the LAN-Free agent when setting it up. I will explain how here and hopefully make it easy to understand.

1. Setup the dsm.sys client file with the following lines

ENABLELANFREE YES

LANFREECOMMMethod TCPIP

LANFREETCPServeraddress <loopback, DNS name, or IP>

LANFREETCPPort 1502

2. Define the agent to the node’s TSM server and the library controller as a server

define server storagent serverpassword=passw0rd hladdress=<loopback, DNS name, or IP> lladdress=1502

Also don’t forget to register the node if not registered already.

register node <nodename> <password> domain=<domain name>

3. On the TSM library controller instance, path the drives seen by the node/LAN-Free agent machine. I am assuming you have connected the client node to the SAN environment and zoned a number of drives to the node. Once the drives are seen in the OS you can define the paths. Make sure you map the paths correctly. In AIX this can be done by running the lscfg -vp | more command, noting the drive serials, and matching them to their corresponding drives in TSM.

DEFine PATH STORAGENT <Drive Name> SRCType=SERVER AUTODetect=YES DESTType=DRIVE LIBRary=<Library Name> DEVIce=/dev/rmtX ONLine=YES

Note: For ACSLS libraries more configuration parameters need to be set. I am showing an IBM hardware example since I would never use anything else!

4. On the client node go to the storage agent’s folder and update the dsmsta.opt file with the following

tcpport 1502

5. Now from that storage agent directory run the following command

dsmsta setstorageserver myname=storagent mypassword=passw0rd myhladdress=<loopback, DNS name, or IP> servername=<node's TSM server name> serverpassword=<node's TSM server password> hladdress=<node's TSM server IP or DNS name> lladdress=1500 <or port used by node's TSM server>

Note: Notice I am pointing the agent to the node’s TSM server. Even though it is a library client and does not control the drives, by defining the agent to both the client TSM server and the controller instance a handoff from the library client to the library controller will occur automatically. If you do not define the LAN-Free agent to the library controller the agent will fail.

You should now be able to test the configuration by starting dsmsta in the foreground and then, from another telnet window, starting a TSM command line client and running a backup (if using Windows, open a dsmc client). If set up correctly you’ll see the agent contact the controller for a drive, mount a tape, and commence a LAN-Free backup.
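
The port mix-up described at the top of this post is easy to check for before starting the agent. Here is a rough sketch of such a check, not something from the LAN-Free directions: the function names are made up for illustration, it only reads the first matching line in each file (so a dsm.sys with several server stanzas would need more care), and it assumes the agent falls back to 1500 when dsmsta.opt has no tcpport line, as noted above.

```shell
#!/bin/sh
# Sketch: confirm the client's LANFREETCPPort matches the storage agent's tcpport.
# get_opt and check_lanfree_ports are illustrative names, not TSM utilities.

# Print the value of the first line in file $2 whose first word equals the
# option name $1 (case-insensitive, full option name only).
get_opt() {
    awk -v want="$1" 'tolower($1) == tolower(want) { print $2; exit }' "$2"
}

# $1 = client options file (dsm.sys), $2 = storage agent options file (dsmsta.opt)
check_lanfree_ports() {
    lf_client_port=$(get_opt LANFREETCPPort "$1")
    lf_agent_port=$(get_opt tcpport "$2")
    # No tcpport line in dsmsta.opt: assume the agent default of 1500.
    lf_agent_port=${lf_agent_port:-1500}
    if [ -z "$lf_client_port" ]; then
        echo "MISSING: no LANFREETCPPort line in $1"
        return 1
    fi
    if [ "$lf_client_port" = "$lf_agent_port" ]; then
        echo "OK: both use port $lf_client_port"
    else
        echo "MISMATCH: client expects $lf_client_port, agent listens on $lf_agent_port"
        return 1
    fi
}

# Example call (both paths are assumptions, adjust to your install):
# check_lanfree_ports /usr/tivoli/tsm/client/ba/bin/dsm.sys ./dsmsta.opt
```

Run against the broken setup from this post (1502 in dsm.sys, nothing in dsmsta.opt) it would report a mismatch instead of leaving you to find it from a failed connection.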

Labels: drives, Favorites, lan-free, Library Sharing, Shared Library

Monday, May 08, 2006

TSM 5.2 End Of Service

I just received notification on end of service for TSM 5.2. The date given was April 30, 2007. So I would advise everyone to plan their upgrades to 5.3 or higher accordingly. For more information check out the IBM End Of Service webpage.

Thursday, May 04, 2006

UPDATE on NDMP Problem!

Support has identified the NDMP problem exists in TSM 5.3.3 if the ONTAP version is lower than 7.1. If you have NetApps make sure your ONTAP version is at or higher than version 7.1 before you upgrade your TSM server to 5.3.3 or higher.

Wednesday, May 03, 2006

TSM 5.3.3 NDMP Issue!

We discovered a problem with TSM 5.3.3 and failed NDMP backups. It seemed to be occurring on large volumes. After investigating the issue we called support, and through further investigation it was discovered that there is a bug in TSM’s implementation of the NDMP 4 protocol. This bug will cause some NDMP backups to fail when the backup spans volumes (i.e. uses more than one tape to do the backup of the volume). The suggestion is to either stay at version 5.3.2 or to use the following option in the dsmserv.opt file:

FORCENDMPVERSION3 YES

This option will use the older NDMP protocol and should resolve the failures until a fix is released.

(Note: there is no problem with data integrity of backups that show completed)

Thursday, April 27, 2006

Interesting Command Update In TSM

I found this interesting update on the Tivoli website about the enhancements to the DISABLE/ENABLE SESSIONS command. Basically it discusses the change to the commands in TSM 5.3.1.0 and how the command now disables not only client sessions, but admin and server-to-server sessions also. This would not be a problem had a customer not revoked system authority from his SERVER_CONSOLE ID. This locked the customer out of his server entirely, and his only recourse was to either roll back to 5.3.0 or restore a DB backup from before the disable was issued. Have many of you revoked SERVER_CONSOLE privileges? I would never consider it since it is a fail-safe in the event something bad happens. I understand security, but if a person can get onto the server and start the program in the foreground you have bigger problems than the SERVER_CONSOLE ID having system authority.

Thursday, April 13, 2006

TSM Is Not A Security Tool!

I thought I would pass along some of the crazier things I have seen with TSM and get your input to see who has the wildest/stupidest story concerning TSM. My most recent experience is when I was asked to set up scripts to alert security personnel when a specific file was changed and backed up. Reason? If the file was changed it showed that the servers had been compromised. I, of course, stated without reservation NO! TSM is not a security tool and will not be used as one. That’s what security-monitoring tools are for!

Then there was the time we were asked to provide a hard copy list of every file in backup storage. (That means a printed list of every file on tape. I kid you not!) This insane request was from a customer who had no idea what they were asking. When told what the results would be they insisted on having the list provided. We were floored. What idiot is going to sift through the mounds of paper and identify every file?

Of course there are always the requests that come in from people wanting data from the 70’s, 80’s, and a lot from the 90’s. If I had a reel-to-reel machine or older tape hardware, I still wouldn’t have the foggiest idea how to restore the data, since the computers the data was from are long gone and whatever tools were used to back up the data are probably buried with the person who once used those archaic tools (you mainframe people crack me up!). Have you ever seen Real Genius? If you haven’t then I suggest it for a good laugh. There is one character in particular that I love, Lazlo Hollyfeld. Played by Jon Gries, Lazlo is a scientist at an MIT/CIT-like school who suffered a breakdown, lives in the closet of the lead characters’ dorm room, and is not quite living in reality. I work with a Lazlo! I swear he looks like Lazlo, and when he goes into his cube it reminds me of him. I say this because he is a “Mainframer” and laughs and scoffs at us Open Systems people. Even he didn’t have a clue how data from that long ago could be restored since none of the software or hardware was available.

Then there are always the requests where the person needs data restored that is missing. What data? They are not sure! What was the name, or do they know some portion of the name or extension? No! How long has it been gone? Not sure! They think it was from 4-5 years ago. These requests kill me! Even if I was willing to go look at the old archives of the systems I still wouldn’t have any idea what the customer was really looking for. These are just some of the things I have run into and I am sure you all have many more great stories! Please share and let others feel your pain and enjoy a good laugh.

Monday, April 03, 2006

TSM Client Issue!

I have been informed by Tivoli support personnel that a critical issue has been identified in the TSM backup/archive client that could affect data integrity. The issue involves the RESOURCEUTILIZATION option and how it works when backing up to tape. If you have RESOURCEUTIL set higher than the default and the client backs up directly to tape, data may not be backed up or archived, and files that were not archived may be incorrectly deleted, all without an error message being issued.

If any of the following conditions are met you are NOT affected by the problem:

- The RESOURCEUTILIZATION client option is either not explicitly set, or is set to 1 or 2. This option can be set either in the client option set or schedule on the server, or in the local client options.

- Data for the client node is only stored in random-access disk storage pools (such as the preconfigured storage pool, BACKUPPOOL).

- Image backup is used.

- NDMP backup (initiated by client or server) is used.

- Only the API client is used (including programs and products that use only the API client - i.e. TDP's).

A fix is scheduled for release at the end of April. In the meantime, make sure you either set any client using a higher RESOURCEUTIL value to 2, or send the client's backup to random-access disk. Also note that any backup to disk using the FILE devclass is impacted by this problem, as it affects all sequential media backup types.

I have provided a link to the APAR listing at the Tivoli website.
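
If you want a quick way to see which clients have the option raised locally, something like the following sketch could be run against each client options file. It is only an illustration: the function names are invented, it assumes RESOUR is the shortest accepted abbreviation of the option, and it cannot see a value pushed down from a client option set or schedule on the server, so those still need to be checked separately.

```shell
#!/bin/sh
# Sketch: flag a client options file whose RESOURCEUTILIZATION is above 2.
# get_resourceutil and resourceutil_exposed are illustrative names.

# Print the value from the first line whose keyword is RESOURCEUTILIZATION or
# an abbreviation of it (assumed minimum: RESOUR), matched case-insensitively.
get_resourceutil() {
    awk '{
        key = tolower($1)
        if (length(key) >= 6 && index("resourceutilization", key) == 1) {
            print $2
            exit
        }
    }' "$1"
}

# Exit status 0 when the file sets a value above 2, non-zero otherwise.
resourceutil_exposed() {
    ru_value=$(get_resourceutil "$1")
    case "$ru_value" in
        ''|*[!0-9]*) return 1 ;;   # not set locally, or not a plain number
    esac
    [ "$ru_value" -gt 2 ]
}

# Example call (the path is an assumption):
# resourceutil_exposed /usr/tivoli/tsm/client/ba/bin/dsm.sys && echo "check this client"
```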

Thursday, March 23, 2006

Oracle TDP and HACMP 5.3 Incompatibility Resolved

Well, I was having a heck of a time with this problem. It seems the 5.3 HACMP binaries are incompatible with the Oracle 64-bit API, so the TDP would crash when attempting to back up a DB on a cluster. I was expecting a fix and was told it would be available by January. So I waited and watched, and never saw an update to the TDP. Frustration mounted until I was able to get ahold of a TDP developer, who pointed out that it was the HACMP side that had to do the patching, and now all is well. So if you are looking to use HACMP and back up Oracle DBs with the TDP, make sure the HACMP servers have the latest patch levels installed or the 64-bit API will not work.

Thursday, March 16, 2006

TSMExpress Update

Yesterday I sat through a TSMExpress presentation and thought I would explain the product to those of you interested. First off, TSMExpress is a Windows-only product and has limited support for applications (basically Exchange and SQL). TSMExpress will only support a 20GB DB and ALL BACKUPS GO TO DISK! Yes, that’s right; there is no direct backup to tape option. What TSMExpress utilizes is the backupset function, which has been updated to make the backupset catalogue more like BackupExec or Arcserve. This is how TSMExpress will handle the Grandfather, Father, Son process of backup. Here is how it works: TSMExpress backs up all data to disk and keeps the data on disk for up to 14 days. What I haven’t got an answer to involves the disk pools and their use of the FILE device class. TSMExpress, from what the presentation showed, does not use the normal TSM disk pools but instead uses the FILE device class. According to the presentation the FILE device class can span drives (currently on TSM it cannot, since you have to state a single drive/folder location for the files to be created).

TSMExpress’ tape creation and handling is also a little different in that it doesn’t use tape pools like we know them in TSM. There are no primary and copy tape pools; to put the data out to tape you generate a backupset (they call it a media copy). TSMExpress allows for multiple backupsets on a tape, so tape usage won’t be as bad as you might have been thinking, and they have enhanced the restore process so backupsets can be explored on the file level through the GUI. The other nice feature is that if the TSMExpress DB was ever corrupted or unable to be restored it can be recreated through the backupset catalogues (interesting feature). So once the data is on tape you send it offsite and the backupsets become your DR solution.

TSMExpress is expected to be upgradeable to TSM by next year but currently it cannot upgrade to its beefier older sibling. The backupsets are transferable, however, so you can move data between products in that way. The web interface is also a “Dummies” version and is very easy to work with as you can see it’s pretty basic. What I didn’t hear was whether the web interface was integrated or part of the ISC. I will have to ask around on that one. Overall I would say TSMExpress looks to be a good product to fill a much needed role for the SMB market.

Monday, March 13, 2006

How To Setup A Library Manager Instance (Revised)

I had to revise this as I left out the checkin of the tapes before the audit. I had to do this process this weekend as we moved to a new library and library manager and I realized I left out the tape checkin.

I was recently asked how to set up a dedicated TSM Library Manager instance to resolve issues with using a production backup instance as the manager. There are definite benefits to using a dedicated instance, like performance and manageability. Performance-wise, TSM has DB issues, and when large tasks are running performance is impacted all around. This impact affects library usage, so having the dedicated library manager allows for a smaller DB and no heavy tasks to bog down all the mount requests. Manageability is increased in that all you need to worry about are the drives and their paths. That’s right, no backups to worry about (except the DB), and even if you lost the system’s DB backup and had to start from scratch, the other servers sharing this instance could easily reconcile their inventory and you would not lose any server data (so it’s also a lot safer to use than relying on a production TSM instance). If this instance is also on a dedicated server, do not back up the server to the library manager; back it up to one of the other instances. NO BACKUPS OR ARCHIVES SHOULD BE DONE TO THIS INSTANCE. The key to the dedicated instance is keeping the DB as small as possible, and this is easily done if nothing else is run on the instance. When creating our library manager instance I was unsure how large to make the DB, so I made it 2GB, and it currently is 4.6% utilized. The instance starts fast, so cycling it for updates or when problems occur keeps downtime to a minimum. OK, so here are the steps to set up the instance. Just remember there will be an outage needed on the current TSM library manager as you remove drives and paths.

Step 1: Create the dedicated instance

You can create the instance fairly quickly by creating a subdirectory on the TSM server like so:

mkdir /usr/tivoli/tsm/libserv/bin

Copy the dsmserv.opt file and, if you plan to use the old web interface, the dsmserv.idl file to this new directory. Then define a DB and Log volume, either using raw logical volumes or using dsmfmt to format one. Depending on your library size I would make the DB 500MB to be safe. At that size its space should not be too much of a premium. The log can be half that and still function fine. If you want to be extra careful you can be like me and make it 1-2GB, but again my DB is barely used at that size. Once you have created the volumes you need to run dsmserv format to initialize the instance. If the instance is going to be on a server with other TSM instances you’ll need to export TSM variables so they point to the new /usr/tivoli/tsm/libserv/bin directory. Export the following variables before running the dsmserv format command:

export DSMSERV_CONFIG=/usr/tivoli/tsm/libserv/bin/dsmserv.opt

export DSMSERV_DIR=/usr/tivoli/tsm/server/bin

export DSMSERV_ACCOUNTING_DIR=/usr/tivoli/tsm/libserv/bin

Now you can run dsmserv format and the instance will be initialized and ready to be used. In the ../libserv/bin directory you should now have a dsmserv.dsk file that lists this instance’s DB and Log files. You can at this point either use the instance as is or load the old web interface definitions so that they are available to those who prefer it over the ISC. Once ready to use you can now start the instance and setup its settings for Server-to-Server communication. Remember the other TSM instances have to be able to talk to this instance. Make sure you set the HL and LL address, set the server password, set the server name, the URL, and don’t forget to set crossdefine to ON.
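
When several instances live on one server it is easy to forget which set of variables is active in your shell. As a sketch only (the function name use_tsm_instance is made up, and the example directories are the ones used in this post), the three exports from Step 1 could be wrapped in a small helper that refuses to switch if the instance directory has no dsmserv.opt:

```shell
#!/bin/sh
# Sketch: point the current shell at one TSM instance directory.
# use_tsm_instance is an illustrative name, not a TSM utility.
# $1 = instance directory (holds dsmserv.opt and dsmserv.dsk)
# $2 = directory holding the shared server binaries
use_tsm_instance() {
    if [ ! -f "$1/dsmserv.opt" ]; then
        echo "no dsmserv.opt found in $1" >&2
        return 1
    fi
    DSMSERV_CONFIG="$1/dsmserv.opt"
    DSMSERV_DIR="$2"
    DSMSERV_ACCOUNTING_DIR="$1"
    export DSMSERV_CONFIG DSMSERV_DIR DSMSERV_ACCOUNTING_DIR
}

# Example call, using the directories from Step 1:
# use_tsm_instance /usr/tivoli/tsm/libserv/bin /usr/tivoli/tsm/server/bin
```

Because it has to change the variables of your current shell, the function needs to be sourced into that shell rather than run as a separate script.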

Note: The next step can be done ahead of time if you can allow for a longer library outage.

Once you have set the parameters you feel are necessary, whether for security or event and actlog retention, you can then move over to the current TSM library manager and begin removing the paths and then the drives (in that order). TSM does not like conflicts so don’t try to define the drives on the new Library Manager ahead of time as you could cause serious problems with the library. Delete all paths and drives then delete the library path and finally delete the library itself. You can no longer use this library definition since it is an automated library and when sharing the library the non-library manager TSM instances use a Shared library definition.
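
Since the order matters (drive paths, then drives, then the library path, then the library), it can help to generate the delete commands rather than type them. The sketch below just prints the commands so you can review them before pasting them into an admin session. The function name is invented, the server, library, and drive names are whatever you pass in, and it only covers paths owned by one source server; if other servers also have paths to these drives, those paths need to be deleted before the drives.

```shell
#!/bin/sh
# Sketch: print the teardown commands for the old library manager in a safe order.
# print_teardown_commands is an illustrative name; nothing is executed here.
# $1 = server name that owns the paths, $2 = library name, remaining args = drive names
print_teardown_commands() {
    td_server=$1
    td_library=$2
    shift 2
    # 1. Drive paths first
    for td_drive in "$@"; do
        echo "delete path $td_server $td_drive srctype=server desttype=drive library=$td_library"
    done
    # 2. Then the drives themselves
    for td_drive in "$@"; do
        echo "delete drive $td_library $td_drive"
    done
    # 3. Then the library path, and finally the library definition
    echo "delete path $td_server $td_library srctype=server desttype=library"
    echo "delete library $td_library"
}

# Example call (all names are placeholders):
# print_teardown_commands TSM1 ATL01 DRIVE01 DRIVE02
```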

Step 2: Define server to server communication for all servers sharing the library

This is quite easy and fairly straightforward, so I won’t cover it in much detail. On all servers that will be sharing the library make sure you have set their server name, server password, HL and LL address, and URL, and set crossdefine to ON. Then (using the old web interface) on the servers that will be library clients select Object View -> Server -> Other Servers. Here you can see the servers defined and define new servers. Select Define Server from the drop down menu and then put the new library manager’s info into the corresponding fields. Make sure you crossdefine and then you’re ready to set up the library.
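
For those who prefer the command line over the old web interface, the same settings can be entered as admin commands. The following is a sketch that prints them for review; the function names and all of the example values are placeholders, and the URL setting mentioned above is left out.

```shell
#!/bin/sh
# Sketch: print the server-to-server settings as admin commands.
# Function names are illustrative; nothing is sent to a TSM server.

# Settings to run on each server itself.
# $1 = server name, $2 = server password, $3 = HL address, $4 = LL address (port)
print_s2s_settings() {
    cat <<EOF
set servername $1
set serverpassword $2
set serverhladdress $3
set serverlladdress $4
set crossdefine on
EOF
}

# Command to run on a library client to define the new library manager.
# $1 = manager's server name, $2 = its password, $3 = its HL address, $4 = its LL address
print_define_server() {
    echo "define server $1 serverpassword=$2 hladdress=$3 lladdress=$4 crossdefine=yes"
}

# Example calls (all values are placeholders):
# print_s2s_settings LIBMGR secret libmgr.example.com 1500
# print_define_server LIBMGR secret libmgr.example.com 1500
```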

Step 3: Define drives and paths on new library manager

Back on the new library manager instance you can now define the library and the drives and paths. Make sure when defining the new automated library you select the shared option to allow the library to be shared by other instances otherwise it will be accessible only to this instance.

DEFINE LIBRARY ATL01 LIBTYPE=SCSI SHARED=YES AUTOLABEL=YES

Now that the library is defined you need to define the library path and all the drives and their respective paths, this includes the path definitions for every other TSM server that will be using this library. Remember no drive or path definitions are made on the library clients. The only definitions for the library clients are paths on the library manager. Once the drives and paths are defined you will need to checkin the tapes and audit the library. The library manager should see the tapes in the library when you decide to check them in and now you can progress to the next step. If you have tapes that are moving from another library check them in at this time. You have to make a decision at this point to either checkin the tapes now or wait until the other servers have completed Step 4. When you check them in you should check them in as private then after each TSM server reacquires its tapes you can update the private tapes not owned by any TSM server to scratch. This can be seen when you do a Q LIBVOL and the easiest way to do this is to use a select call to create a list then run the list against a shell script to update each tape.

Here is the select command:

SELECT VOLUME_NAME FROM LIBVOLUMES WHERE OWNER IS NULL

Here is the shell script I use:

echo PROCESSING.........

while read LINE

do

dsmadmc -id=<admin id> -pa=<admin password> upd libvol <library name> $LINE status=scratch

echo $LINE updated

done < $1

Step 4: Define shared library and update device classes

Now that the library clients can communicate with the new library manager you will need to define a new library definition. Know that you can reuse the name from the old library settings you deleted if you like. Here we will be setting up a shared library definition (again from the old web admin interface); select Object View -> Server Storage -> Libraries and Drives -> Shared Libraries. From the drop down menu select Define Shared Library and fill out the needed information of Library Name and Primary Library Manager. The primary library manager info should point to the server name you defined for the new library manager instance. Since the server name you defined has all the TCP/IP and port settings it is important you use the same name as the name given to the new library manager. Once the library definition is created you will need to update the device classes on each library client to use the new library. You can use the old web admin interface selecting Object View -> Server Storage -> Device Classes then select the device class type that was in use (i.e. LTO device class) and update each device class by selecting each one then selecting the drop down menu and choose Update Device Class. Update the library name field and if needed set the mount limit if you don’t want this server to be able to use all the drives in the library. Setting the mount limit is an easy way to pseudo-partition the library when its shared. I highly recommend using the mount limit option to allow each system some dedicated access to drives without having to designate specific drives using set path definitions for each library client.

Step 5: Run AUDIT LIBRARY on each TSM library client

Now that the library clients can see the library manager they need to reconcile the library inventory so they can use their tapes. This can be done by running the audit library command AUDIT LIBRARY <SHARED LIBRARY> CHECKLABEL=YES. This will allow each library client to contact the TSM Library Manager and tell it to reassign its tapes back. This audit should be done periodically to make sure each TSM server sees correct volhistory information. If once the volumes have been reconciled for all TSM library clients and you do not see any scratch, you can run a check-in to allow the scratch volumes to be recognized. One thing to be aware of, on the old TSM library manager you will need to delete all volumes listed as remote. This will free up tapes to go to scratch otherwise you’ll have “phantom” tapes that have no data, are not used in any storage pool for any servers, but will show assigned to the old library manager instance. You can run the following command to delete them from your volume history, unfortunately there is no blanket command that seems to work to remove them all at once:

DELete VOLHistory TODate=TODAY Type=REMOTE VOLume=NT1904 FORCE=Yes

What you will want to do is generate a list of volumes from the old library manager that are in REMOTE status and then run that list through this command to delete the volumes from its volume history file allowing them to go back to scratch status when needed.

I hope you have all found this helpful and if I missed a step please feel free to add your input.

I was recently asked how to set up a dedicated TSM Library Manager instance to resolve issues with using a production backup instance as the manager. There are definite benefits to using a dedicated instance, namely performance and manageability. Performance-wise, TSM has DB issues, and when large tasks are running, performance suffers all around. That impact carries over to library usage, so a dedicated library manager gives you a smaller DB and no heavy tasks to bog down the mount requests. Manageability improves because all you need to worry about are the drives and their paths. That’s right, no backups to worry about (except the DB), and even if you lost the system’s DB backup and had to start from scratch, the other servers sharing this instance could easily reconcile their inventory and you would not lose any server data (so it’s also a lot safer than relying on a production TSM instance). If this instance is also on a dedicated server, do not back up the server to the library manager; back it up to one of the other instances. NO BACKUPS OR ARCHIVES SHOULD BE DONE TO THIS INSTANCE. The key to the dedicated instance is keeping the DB as small as possible, and this is easily done if nothing else is run on the instance. When creating our library manager instance I was unsure how large to make the DB, so I made it 2GB, and it is currently 4.6% utilized. The instance starts fast, so cycling it for updates or when problems occur keeps downtime to a minimum. OK, so here are the steps to set up the instance. Just remember there will be an outage needed on the current TSM library manager as you remove drives and paths.

Step 1: Create the dedicated instance

You can create the instance fairly quickly by creating a subdirectory on the TSM server like so:

mkdir -p /usr/tivoli/tsm/libserv/bin

Copy the dsmserv.opt file and, if you plan to use the old web interface, the dsmserv.idl file to this new directory. Then define a DB and Log volume, either using raw logical volumes or using dsmfmt to format one. Depending on your library size I would make the DB 500MB to be safe. At that size, space should not be at too much of a premium. The log can be half that and still function fine. If you want to be extra careful you can be like me and make the DB 1-2GB, but again my DB is barely used at that size. Once you have created the volumes you need to run dsmserv format to initialize the instance. If the instance is going to be on a server with other TSM instances, you’ll need to export the TSM variables so they point to the new /usr/tivoli/tsm/libserv/bin directory. Export the following variables before running the dsmserv format command:

export DSMSERV_CONFIG=/usr/tivoli/tsm/libserv/bin/dsmserv.opt

export DSMSERV_DIR=/usr/tivoli/tsm/server/bin

export DSMSERV_ACCOUNTING_DIR=/usr/tivoli/tsm/libserv/bin
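As a rough sketch of the whole sequence, the function below only prints the commands rather than running them, so you can review them first. The volume file names (db01.dsm, log01.dsm) are made up for illustration, and the rounding to a multiple of 4MB plus 1MB of overhead reflects my understanding of how dsmfmt sizes volumes in TSM 5.x, so check it against your own documentation.

```shell
#!/bin/sh
# Dry-run sketch: print the commands that format a DB and log volume and
# initialize the instance. File names and sizes here are assumptions.
INST_DIR=/usr/tivoli/tsm/libserv/bin

print_format_steps() {
    # $1 = DB size in MB, $2 = log size in MB
    db_mb=$1
    log_mb=$2
    echo "export DSMSERV_CONFIG=$INST_DIR/dsmserv.opt"
    echo "export DSMSERV_DIR=/usr/tivoli/tsm/server/bin"
    echo "export DSMSERV_ACCOUNTING_DIR=$INST_DIR"
    # Run from the instance directory so dsmserv.dsk is created there.
    echo "cd $INST_DIR"
    # Assumption: round up to a multiple of 4 MB, then add 1 MB overhead.
    echo "dsmfmt -m -db $INST_DIR/db01.dsm $(( (db_mb + 3) / 4 * 4 + 1 ))"
    echo "dsmfmt -m -log $INST_DIR/log01.dsm $(( (log_mb + 3) / 4 * 4 + 1 ))"
    # dsmserv format takes the log volume count and names, then the DB ones.
    echo "dsmserv format 1 $INST_DIR/log01.dsm 1 $INST_DIR/db01.dsm"
}

print_format_steps 500 250
```

If you use raw logical volumes instead, the dsmfmt lines drop out and the dsmserv format line would name the raw devices.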

Now you can run dsmserv format and the instance will be initialized and ready to be used. In the ../libserv/bin directory you should now have a dsmserv.dsk file that lists this instance’s DB and Log files. At this point you can either use the instance as is or load the old web interface definitions so that they are available to those who prefer it over the ISC. Once ready, you can start the instance and configure it for Server-to-Server communication. Remember the other TSM instances have to be able to talk to this instance. Make sure you set the HL and LL address, the server password, the server name, and the URL, and don’t forget to set crossdefine to ON.
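For those who prefer the command line, here is a minimal sketch that prints the matching administrative commands so they can be saved as a macro. The server name, password, address, and port in the sample call are placeholders, and the URL setting is left out because I am not certain of its exact command on every server level.

```shell
#!/bin/sh
# Sketch: emit the server-to-server settings for the new library manager.
# All values passed in are placeholders chosen for illustration.
print_server_settings() {
    # $1 = server name, $2 = server password, $3 = HL address, $4 = LL address (port)
    printf 'SET SERVERNAME %s\n' "$1"
    printf 'SET SERVERPASSWORD %s\n' "$2"
    printf 'SET SERVERHLADDRESS %s\n' "$3"
    printf 'SET SERVERLLADDRESS %s\n' "$4"
    printf 'SET CROSSDEFINE ON\n'
}

print_server_settings LIBMGR secret 10.0.0.10 1500
```

The same five settings apply on every server that will share the library, each with its own name and address.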

Note: The next step can be done ahead of time if you can allow for a longer library outage.

Once you have set the parameters you feel are necessary, whether for security or event and actlog retention, you can then move over to the current TSM library manager and begin removing the paths and then the drives (in that order). TSM does not like conflicts so don’t try to define the drives on the new Library Manager ahead of time as you could cause serious problems with the library. Delete all paths and drives then delete the library path and finally delete the library itself. You can no longer use this library definition since it is an automated library and when sharing the library the non-library manager TSM instances use a Shared library definition.
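The teardown order above can be sketched as a generated macro. The library, server, and drive names in the sample call are hypothetical; substitute whatever Q PATH and Q DRIVE show on your current library manager. If that manager already serves library clients, their drive paths need deleting as well, which this sketch does not cover.

```shell
#!/bin/sh
# Sketch: print DELETE commands in the order described in the text:
# drive paths, drives, the library path, then the library itself.
print_teardown() {
    # $1 = library name, $2 = old library manager server name, rest = drive names
    lib=$1
    srv=$2
    shift 2
    for drv in "$@"; do
        printf 'DELETE PATH %s %s SRCTYPE=SERVER DESTTYPE=DRIVE LIBRARY=%s\n' "$srv" "$drv" "$lib"
    done
    for drv in "$@"; do
        printf 'DELETE DRIVE %s %s\n' "$lib" "$drv"
    done
    printf 'DELETE PATH %s %s SRCTYPE=SERVER DESTTYPE=LIBRARY\n' "$srv" "$lib"
    printf 'DELETE LIBRARY %s\n' "$lib"
}

print_teardown ATL01 TSMPROD DRIVE01 DRIVE02
```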

Step 2: Define server to server communication for all servers sharing the library

This is quite easy and I won’t cover it much, as it is fairly straightforward. On all servers that will be sharing the library, make sure you have set their server name, server password, HL and LL address, and URL, and set crossdefine to ON. Then (using the old web interface) on the servers that will be library clients select Object View -> Server -> Other Servers. Here you can see the servers defined and define new servers. Select Define Server from the drop-down menu and put the new library manager’s info into the corresponding fields. Make sure you crossdefine, and then you’re ready to set up the library.
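If you would rather skip the web interface, the same definition can be made from the command line. This sketch just prints the DEFINE SERVER command; the name, password, address, and port in the sample call are placeholders for the new library manager's values.

```shell
#!/bin/sh
# Sketch: print the command a library client would run to define the
# new library manager, with cross definition turned on.
print_define_server() {
    # $1 = library manager name, $2 = its server password,
    # $3 = HL address, $4 = LL address (port)
    printf 'DEFINE SERVER %s SERVERPASSWORD=%s HLADDRESS=%s LLADDRESS=%s CROSSDEFINE=YES\n' \
        "$1" "$2" "$3" "$4"
}

print_define_server LIBMGR secret 10.0.0.10 1500
```

Cross definition only works if both sides already have their own server name, password, and addresses set with crossdefine ON, which is why the settings above come first.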

Step 3: Define drives and paths on new library manager

Back on the new library manager instance you can now define the library and the drives and paths. When defining the new automated library, make sure you select the shared option so the library can be shared by other instances; otherwise it will be accessible only to this instance.

DEFINE LIBRARY ATL01 LIBTYPE=SCSI SHARED=YES AUTOLABEL=YES

Now that the library is defined you need to define the library path and all the drives and their respective paths. This includes the path definitions for every other TSM server that will be using this library. Remember, no drive or path definitions are made on the library clients. The only definitions for the library clients are paths on the library manager. Once the drives and paths are defined you will need to check in the tapes and audit the library. The library manager should see the tapes in the library when you check them in, and you can then progress to the next step. If you have tapes that are moving from another library, check them in at this time. You have to decide at this point whether to check in the tapes now or wait until the other servers have completed Step 4. When you check them in you should check them in as private; then, after each TSM server reacquires its tapes, you can update the private tapes not owned by any TSM server to scratch. You can spot these with Q LIBVOL, and the easiest way to update them is to use a select call to create a list, then run the list through a shell script that updates each tape.
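To make the path layout concrete, here is a small sketch that prints the library path, each drive definition once, and one drive path per server. The server names and device special files in the sample input are invented; each DEVICE value should be the device name as that particular server sees the drive.

```shell
#!/bin/sh
# Sketch: print DEFINE commands for the library manager.
# stdin: one "<server> <drive> <device>" line per path to create.
print_drive_defs() {
    # $1 = library name, $2 = library manager name, $3 = changer device
    lib=$1
    printf 'DEFINE PATH %s %s SRCTYPE=SERVER DESTTYPE=LIBRARY DEVICE=%s\n' "$2" "$lib" "$3"
    seen=" "
    while read -r srv drv dev; do
        [ -n "$srv" ] || continue          # skip blank lines
        case "$seen" in
            *" $drv "*) ;;                 # drive already defined
            *) printf 'DEFINE DRIVE %s %s\n' "$lib" "$drv"
               seen="$seen$drv " ;;
        esac
        printf 'DEFINE PATH %s %s SRCTYPE=SERVER DESTTYPE=DRIVE LIBRARY=%s DEVICE=%s\n' \
            "$srv" "$drv" "$lib" "$dev"
    done
}

printf 'LIBMGR DRIVE01 /dev/rmt0\nTSM1 DRIVE01 /dev/rmt4\n' |
    print_drive_defs ATL01 LIBMGR /dev/smc0
```

All of the output runs on the library manager, including the paths whose source is a library client.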

Here is the select command:

SELECT VOLUME_NAME FROM LIBVOLUMES WHERE OWNER IS NULL

Here is the shell script I use:

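#!/bin/sh
# Usage: pass a file with one volume name per line as the first argument.
echo "PROCESSING........."
# read -r keeps backslashes literal; the quotes guard against odd characters.
while read -r LINE
do
    dsmadmc -id=<admin id> -pa=<admin password> upd libvol <library name> "$LINE" status=scratch
    echo "$LINE updated"
done < "$1"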

Step 4: Define shared library and update device classes

Now that the library clients can communicate with the new library manager, you will need to create a new library definition on each of them. You can reuse the name from the old library settings you deleted if you like. Here we will be setting up a shared library definition (again from the old web admin interface): select Object View -> Server Storage -> Libraries and Drives -> Shared Libraries. From the drop-down menu select Define Shared Library and fill in the Library Name and Primary Library Manager. The primary library manager should be the server name you defined for the new library manager instance. Since that server definition holds all the TCP/IP and port settings, it is important that you use exactly the name given to the new library manager. Once the library definition is created you will need to update the device classes on each library client to use the new library. In the old web admin interface select Object View -> Server Storage -> Device Classes, then select the device class type that was in use (e.g. the LTO device class) and update each device class by selecting it and choosing Update Device Class from the drop-down menu. Update the library name field and, if you don’t want this server to be able to use all the drives in the library, set the mount limit. Setting the mount limit is an easy way to pseudo-partition the library when it’s shared. I highly recommend using the mount limit option to give each system some dedicated access to drives without having to designate specific drives through path definitions for each library client.
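The command-line equivalent on each library client is short. This sketch prints the two commands; the device class name LTOCLASS and the mount limit of 4 in the sample call are made up for illustration, and the library manager name must match the server definition from Step 2.

```shell
#!/bin/sh
# Sketch: print the shared library definition and device class update
# for one library client.
print_client_defs() {
    # $1 = library name, $2 = library manager server name,
    # $3 = device class name, $4 = mount limit for this client
    printf 'DEFINE LIBRARY %s LIBTYPE=SHARED PRIMARYLIBMANAGER=%s\n' "$1" "$2"
    printf 'UPDATE DEVCLASS %s LIBRARY=%s MOUNTLIMIT=%s\n' "$3" "$1" "$4"
}

print_client_defs ATL01 LIBMGR LTOCLASS 4
```

With the mount limit set this way, a client can never hold more than that many drives at once, which leaves the rest free for the other servers.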

Step 5: Run AUDIT LIBRARY on each TSM library client

Now that the library clients can see the library manager, they need to reconcile the library inventory so they can use their tapes. This can be done by running the audit library command AUDIT LIBRARY <SHARED LIBRARY> CHECKLABEL=YES. This will allow each library client to contact the TSM Library Manager and tell it to reassign its tapes back. This audit should be done periodically to make sure each TSM server sees correct volhistory information. If you do not see any scratch tapes once the volumes have been reconciled for all TSM library clients, you can run a check-in so the scratch volumes are recognized. One thing to be aware of: on the old TSM library manager you will need to delete all volumes listed as remote. This frees up tapes to go to scratch; otherwise you’ll have “phantom” tapes that have no data and are not used in any storage pool on any server, but still show as assigned to the old library manager instance. You can run the following command to delete them from your volume history. Unfortunately, there is no blanket command that seems to work to remove them all at once:

DELete VOLHistory TODate=TODAY Type=REMOTE VOLume=NT1904 FORCE=Yes

What you will want to do is generate a list of volumes from the old library manager that are in REMOTE status and then run that list through this command to delete the volumes from its volume history file allowing them to go back to scratch status when needed.
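A minimal sketch of that loop is below. It reads a file of volume names and prints one DELETE VOLHISTORY command per volume, so the result can be reviewed and then run as a macro. How you build the list is up to you; a select against the VOLHISTORY table filtered on the REMOTE type is one option, but treat those table and column names as an assumption to verify on your server.

```shell
#!/bin/sh
# Sketch: turn a list of REMOTE volumes into DELETE VOLHISTORY commands.
print_remote_cleanup() {
    # $1 = file with one volume name per line
    while read -r vol; do
        [ -n "$vol" ] || continue          # skip blank lines
        printf 'DELETE VOLHISTORY TODATE=TODAY TYPE=REMOTE VOLUME=%s FORCE=YES\n' "$vol"
    done < "$1"
}

# Example: print_remote_cleanup remote_vols.txt > cleanup.mac
```

Writing the commands to a macro file first means a single dsmadmc login can run the whole batch, and you get a record of exactly which volumes were removed.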

I hope you have all found this helpful and if I missed a step please feel free to add your input.

Labels: Favorites, Library Controller, Library Sharing, TSM

Monday, February 27, 2006

NIM vs. SysBack for DRP